Effective Software Testing

My personal notes on the book Effective Software Testing.

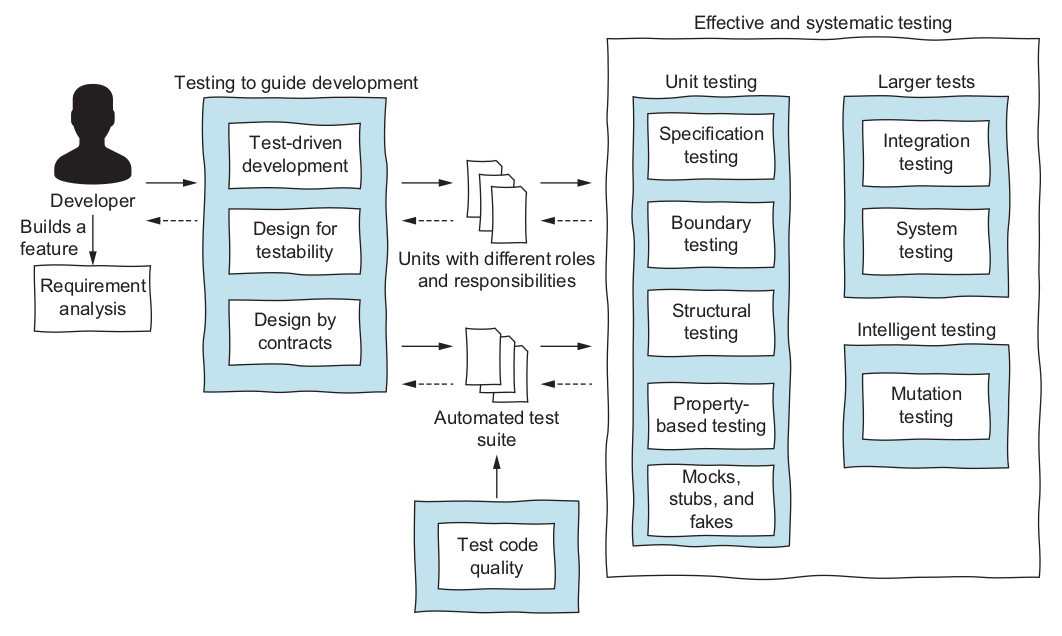

1. Effective and Systematic Software Testing

- Software testing is about finding bugs.

- Bugs love boundaries: test minimum and maximum values.

- Lists as inputs may contain zero elements, one element, many elements, different values, and identical values.
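
As a minimal sketch of testing at the boundaries, the example below checks a made-up free-shipping rule right at its threshold, one step below it, and at the minimum valid value. The function name, the threshold, and the fee are assumptions chosen for illustration; they do not come from the book.

```python
FREE_SHIPPING_THRESHOLD_CENTS = 5000  # hypothetical business rule


def shipping_fee_cents(order_total_cents: int) -> int:
    """Return the shipping fee for an order total, both expressed in cents."""
    if order_total_cents < 0:
        raise ValueError("order total must not be negative")
    if order_total_cents >= FREE_SHIPPING_THRESHOLD_CENTS:
        return 0
    return 499


def test_shipping_fee_boundaries() -> None:
    # Just below and exactly on the threshold: the behavior flips here.
    assert shipping_fee_cents(4999) == 499
    assert shipping_fee_cents(5000) == 0
    # Minimum valid value.
    assert shipping_fee_cents(0) == 499
    # One step below the minimum must be rejected.
    try:
        shipping_fee_cents(-1)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for a negative total")
```

A bug such as writing `>` instead of `>=` would only be caught by the test on the threshold itself, which is why the boundary values matter more than values in the middle of a range.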

Software Testing for Developers

- Domain testing: break requirements into small parts, and use them to derive test cases.

- Structural testing = code coverage.

- Testing is an iterative process.

- Creativity and domain knowledge help in devising test cases.

- Non-smelly code is less prone to defects, but simplicity does not imply correctness.

- The cost of bugs often outweighs the cost of prevention.

- Focus on writing the right tests: learn to know what to test and when to stop.

- Automation is key for an effective testing process.

Principles of Software Testing

- Exhaustive testing is impossible. We need to prioritize.

- Know when to stop testing.

- Variability is important. Use different testing techniques.

- Bugs happen in some places more than others.

- No matter what testing you do, it will never be perfect or enough.

- Context is king.

- Verification is not validation.

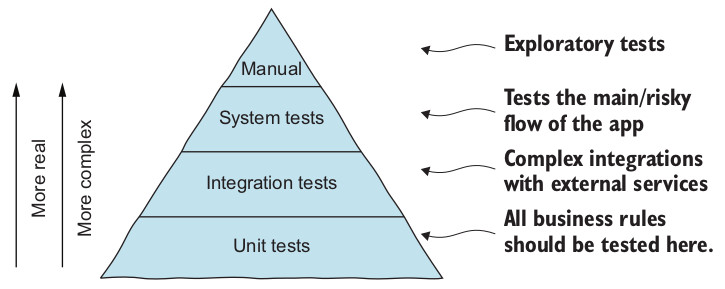

The Testing Pyramid

- Unit Testing: used to test a single unit in isolation, purposefully ignoring the other units of the system. They are fast, easy to control and to write, but lack reality and cannot catch some bugs.

- Integration Testing: used to test the integration between our code and external parties (e.g. a database). They are more difficult to write.

- System Testing: used to test a system in its entirety. We only care that, given an input X, the system will provide an output Y. These tests are very realistic and thus give us more confidence in the system. On the other hand, they are very slow, harder to write, and more prone to flakiness.

2. Specification-based Testing

- The idea is to derive tests from the requirements themselves.

The Requirements Say It All

- Steps:

- Understand the requirements, inputs, and outputs.

- Explore what the program does for various inputs. Build a mental model of how the program should work.

- Explore possible inputs and outputs, and identify partitions. Partitions are nothing more than input classes (i.e. the “0-1-N case”):

- Null values

- Empty values

- Value with length 1

- Value with length > 1

- Analyse the boundaries. Test the points where an input crosses from one partition to another.

- Devise test cases. Combine the partitions found in the previous step. In this step you may remove combinations that are superfluous (e.g. repeatedly testing `null` values).

- Write automatic test cases. Prefer simple test methods that focus on one test case.

- Augment the test suite with creativity and experience.
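
The steps above can be sketched on a small, made-up requirement: "return the longest word in a list, or nothing when there is no input". The function `longest_word` and its tie-breaking rule (first longest word wins) are assumptions for illustration. Each test covers one partition or boundary.

```python
from typing import Optional, Sequence


def longest_word(words: Optional[Sequence[str]]) -> Optional[str]:
    """Return the longest word, or None for missing or empty input.

    On ties, the first of the longest words is returned.
    """
    if not words:
        return None
    return max(words, key=len)


def test_null_input() -> None:
    assert longest_word(None) is None


def test_empty_input() -> None:
    assert longest_word([]) is None


def test_single_element() -> None:
    assert longest_word(["a"]) == "a"


def test_many_elements() -> None:
    assert longest_word(["a", "abc", "ab"]) == "abc"


def test_boundary_equal_lengths() -> None:
    # Boundary between "one clear winner" and "tie".
    assert longest_word(["ab", "cd"]) == "ab"
```

Combinations such as "null list with many elements" are impossible, and testing `None` more than once adds nothing, so they are left out on purpose.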

Finding Bugs with Specification Testing

- Domain knowledge is still fundamental to engineer good test cases.

- The process should be iterative, not sequential.

- Specification testing should go as far as the importance of the code, i.e., how big the cost of failure is.

- Use variations of the same input to facilitate understanding.

- When the number of combinations explodes, be pragmatic.

- When in doubt, go for the simplest input.

- Pick reasonable values for inputs you do not care about.

- Test for `null`s and exception cases, but only when it makes sense.

- Go for parametrized tests when possible.
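
A parametrized test keeps variations of the same input in one table. The sketch below uses `subTest` from Python's standard `unittest` module as one possible way to do this; the leap-year function is an assumed example, not taken from the book.

```python
import unittest


def is_leap_year(year: int) -> bool:
    """Gregorian rule: divisible by 4, except centuries not divisible by 400."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


class LeapYearTest(unittest.TestCase):
    def test_leap_year_rule(self) -> None:
        # Each row is one test case: (input, expected result).
        cases = [
            (2024, True),   # divisible by 4
            (2023, False),  # not divisible by 4
            (1900, False),  # century, not divisible by 400
            (2000, True),   # century, divisible by 400
        ]
        for year, expected in cases:
            # subTest reports every failing row separately.
            with self.subTest(year=year):
                self.assertEqual(is_leap_year(year), expected)
```

Saved in a file, this can be run with `python -m unittest`. Adding a new case is a one-line change, which makes it cheap to keep similar inputs side by side.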

3. Structural Testing and Code Coverage

- Using the structure of the source code to guide testing is known as structural testing.

- Code coverage criteria:

- Line coverage

- Branch coverage

- Condition + branch coverage

- Path coverage: covers all possible paths of execution of a program.

- Modified condition/decision coverage (MC/DC) criterion looks at the combination of conditions, but only the most important ones that must be tested.

- Mutation testing

- We purposefully insert a bug in the code and check whether the test suite breaks.

- Because it is very expensive, it is best reserved for sensitive parts of the system.
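
To make the mutation idea concrete, here is a hand-written sketch. Real mutation tools generate mutants automatically; in this example the mutant is written by hand, and all function names are assumptions for illustration.

```python
from typing import Callable


def is_adult(age: int) -> bool:
    """Original implementation."""
    return age >= 18


def is_adult_mutant(age: int) -> bool:
    """Mutant: the operator '>=' was replaced by '>'."""
    return age > 18


def weak_suite(fn: Callable[[int], bool]) -> bool:
    """Return True if every check passes. Has no test on the boundary."""
    return fn(30) is True and fn(5) is False


def strong_suite(fn: Callable[[int], bool]) -> bool:
    """Same checks plus both sides of the boundary at 18."""
    return weak_suite(fn) and fn(18) is True and fn(17) is False


# The mutant "survives" the weak suite: the suite cannot tell it apart
# from the original, even though both lines are fully covered.
mutant_survives_weak = weak_suite(is_adult_mutant)

# The strong suite "kills" the mutant: it fails on the mutated code.
mutant_killed_by_strong = not strong_suite(is_adult_mutant)
```

Both suites reach full line and branch coverage of `is_adult`, yet only the one with boundary tests detects the injected bug. That gap is what mutation testing is meant to reveal.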

4. Designing Contracts

- Contracts ensure that classes can safely communicate with each other without surprises.

- Pre and post-conditions

- Pre-condition: what the method needs to function correctly.

- Post-condition: what the method guarantees as outcome.

- `assert()` is a simple option to implement pre- and post-conditions.

- Pre-conditions can be weak or strong depending on your requirements. In some cases, we cannot weaken the pre-condition.

- Invariants

- These are conditions that should hold before and after the method execution.

- One could add an `invariant()` check at the end of the method.

- Changing contracts, and the Liskov Substitution Principle

- We sometimes need to change contracts.

- The easiest way to understand the impact of a change is to look at all the other classes (dependencies) that may use the changing class.

- LSP:

- The pre-conditions of subclass S should be the same as or weaker than the pre-conditions of base class B.

- The post-conditions of subclass S should be the same as or stronger than the post-conditions of base class B.

- Pre-conditions, post-conditions, and invariants provide developers with ideas about what to test.

- Contracts complement unit testing.

- Validation and contract checking are different things with different objectives.
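
The following sketch shows pre-conditions, a post-condition, and an invariant expressed with plain `assert` statements. The `Basket` class and its rules are hypothetical. Note that Python removes `assert` statements when run with the `-O` flag, so this style suits contract checking during development and testing, not validation of user input.

```python
class Basket:
    """Hypothetical shopping basket used only to illustrate contracts."""

    def __init__(self) -> None:
        self._items = {}  # product name -> quantity
        self.total_quantity = 0

    def _invariant(self) -> bool:
        # Must hold before and after every public method:
        # the cached total matches the items, and no quantity is <= 0.
        quantities = self._items.values()
        return (
            self.total_quantity == sum(quantities)
            and all(q > 0 for q in quantities)
        )

    def add(self, product: str, quantity: int) -> None:
        # Pre-conditions: what the method needs to function correctly.
        assert product, "product must be a non-empty string"
        assert quantity > 0, "quantity must be positive"
        old_total = self.total_quantity

        self._items[product] = self._items.get(product, 0) + quantity
        self.total_quantity += quantity

        # Post-condition: what the method guarantees as outcome.
        assert self.total_quantity == old_total + quantity
        # Invariant check at the end of the method.
        assert self._invariant()
```

Each assertion also hints at a test: one that violates a pre-condition, one that checks the guaranteed outcome, and one that exercises a sequence of calls to see whether the invariant still holds.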

5. Property-based Testing

- In property-based testing, we express the properties that should hold for the tested method. The framework then randomly generates hundreds of different inputs.

- Creativity is required to write such tests.

- Example frameworks: jqwik, QuickCheck.

- Exercising boundaries is a good idea for property-based tests.
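
The idea can be sketched without a framework. The loop below is a hand-rolled stand-in that uses only Python's `random` module; frameworks such as jqwik or QuickCheck additionally take care of input generation strategies and of shrinking failing inputs. The `clamp` function and the chosen ranges are assumptions for illustration.

```python
import random


def clamp(value: int, low: int, high: int) -> int:
    """Limit value to the closed interval [low, high]."""
    return max(low, min(value, high))


def check_clamp_properties(trials: int = 500, seed: int = 42) -> int:
    """Check two properties of clamp on generated inputs.

    Returns the number of inputs checked; raises AssertionError on a
    counterexample. A fixed seed keeps the run repeatable.
    """
    rng = random.Random(seed)
    checked = 0
    for _ in range(trials):
        low = rng.randint(-1000, 1000)
        high = rng.randint(low, 1000)
        # Mix a random value with the boundaries and their neighbours.
        candidates = [rng.randint(-2000, 2000), low, high, low - 1, high + 1]
        for value in candidates:
            result = clamp(value, low, high)
            # Property 1: the result always lies inside the interval.
            assert low <= result <= high, (value, low, high, result)
            # Property 2: values already inside are returned unchanged.
            if low <= value <= high:
                assert result == value, (value, low, high, result)
            checked += 1
    return checked
```

Instead of asserting concrete input and output pairs, the test states what must be true for every input, and the generator is steered towards the boundaries where bugs tend to hide.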

6. Test Doubles and Mocks

- Using objects that simulate the behavior of other objects gives us more control and lets our simulations run faster.

- Types of simulation

- Dummy objects are passed to the class under test, but are never used.

- Fake objects have real working implementations of the class they simulate, but usually in a much simpler way.

- Stubs provide hard-coded answers to the calls performed during the test.

- Mocks act like stubs, but they save all interactions and allow you to make assertions afterwards.

- Spies spy on a dependency: they wrap themselves around the real object and observe its behavior.

- Mocks in the real world

- Some test suites test the mock, not the code, making the tests less realistic.

- Carefully designed and stable contracts make mocks safer to use.

- Tests that use mocks are more coupled with the code they test.

- What to mock:

- Dependencies that are slow

- Dependencies that communicate with external infrastructure

- Cases that are hard to simulate

- What not to mock:

- Entities

- Native libraries and utility methods

- Things that are simple enough

- Types you do not own. Write an abstraction on top of them

- Test doubles must be as faithful as possible.

- Prefer realism over isolation.
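
As an illustration of the difference between a stub and a mock, the sketch below uses `unittest.mock.Mock` from Python's standard library. `InvoiceService`, its repository, and its mailer are hypothetical names; both collaborators stand for abstractions the project owns, in line with the advice above about types you do not own.

```python
from unittest.mock import Mock


class InvoiceService:
    """Hypothetical class under test; both collaborators are injected."""

    def __init__(self, repository, mailer) -> None:
        self._repository = repository
        self._mailer = mailer

    def remind_overdue(self) -> int:
        """Send one reminder per overdue invoice and return the count."""
        overdue = self._repository.find_overdue()
        for invoice in overdue:
            self._mailer.send(invoice["email"], "Your invoice is overdue")
        return len(overdue)


def test_remind_overdue() -> None:
    # Used as a stub: provides a hard-coded answer, no database needed.
    repository = Mock()
    repository.find_overdue.return_value = [
        {"email": "a@example.com"},
        {"email": "b@example.com"},
    ]
    # Used as a mock: records interactions so we can assert on them.
    mailer = Mock()

    service = InvoiceService(repository, mailer)

    assert service.remind_overdue() == 2
    assert mailer.send.call_count == 2
    mailer.send.assert_any_call("a@example.com", "Your invoice is overdue")
    mailer.send.assert_any_call("b@example.com", "Your invoice is overdue")
```

The test is now coupled to the fact that `remind_overdue` calls `send` with exactly these arguments. That is the trade-off named above: more control and speed in exchange for tighter coupling to the implementation.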

7. Design for Testability

- Testability is how easy it is to write an automated test for a system, method or class.

- Separate infrastructure code from domain code.

- Domain is where the core of the system lies, where all business rules, logic, entities, services, and similar elements reside.

- Infrastructure relates to all code that handles external dependencies (e.g. database, the web, filesystem calls).

- Take advantage of hexagonal architecture.

- Dependency injection and controllability

- DI is critical for better testing.

- The dependency inversion principle (depending on interfaces rather than on concrete classes) facilitates testing.

- Make your code observable

- It has to be easy for the rest of the code to inspect the class behavior.

- Sometimes it is OK to create helper methods for test purposes.
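
A small sketch of how dependency injection improves controllability: the domain rule below needs today's date, which is infrastructure. Passing the clock in as a parameter lets a test decide what "today" is. The class name and the discount rule are assumptions for illustration.

```python
from datetime import date
from typing import Callable


class ChristmasDiscount:
    """Hypothetical domain rule: 15% off on 25 December."""

    def __init__(self, today: Callable[[], date] = date.today) -> None:
        # The clock is injected; production code uses the real one by default.
        self._today = today

    def apply(self, amount_cents: int) -> int:
        """Return the price in cents after any discount."""
        now = self._today()
        if now.month == 12 and now.day == 25:
            return amount_cents * 85 // 100
        return amount_cents


def test_discount_on_christmas() -> None:
    discount = ChristmasDiscount(today=lambda: date(2024, 12, 25))
    assert discount.apply(10_000) == 8_500


def test_no_discount_on_other_days() -> None:
    discount = ChristmasDiscount(today=lambda: date(2024, 12, 26))
    assert discount.apply(10_000) == 10_000
```

Had the class called `date.today()` directly inside `apply`, the first test could only pass once a year. With the clock injected, both tests are fast and repeatable, and the return value makes the behavior easy to observe.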

9. Writing Larger Tests

- When to use larger tests?

- You need to test the interaction of multiple classes.

- The class you want to test is a component in a large plug-and-play architecture.

- System tests

- We do not test a single unit of the system, but the whole system.

- We can use it to test the different “user journeys”.

- It covers a user's entire interaction with the system to achieve some goal.

- They act as one path in a use case.

- Patterns and best practices

- Provide a way to set the system to the state that the test requires (fixtures).

- Make sure each test always runs in a clean environment.

- Visit every step of a journey only when that journey is under test.

- Pass important configuration to the test suite.
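
The first two practices can be sketched with an in-memory SQLite database from Python's standard library: every test gets a fresh database (clean environment) that a fixture brings to a known state. The schema and the helper functions are hypothetical.

```python
import sqlite3
import unittest


def create_schema(conn: sqlite3.Connection) -> None:
    conn.execute(
        "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
    )


def register_user(conn: sqlite3.Connection, name: str) -> int:
    """Insert a user and return its generated id."""
    cursor = conn.execute("INSERT INTO users (name) VALUES (?)", (name,))
    conn.commit()
    return cursor.lastrowid


def count_users(conn: sqlite3.Connection) -> int:
    return conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]


class UserRegistrationTest(unittest.TestCase):
    def setUp(self) -> None:
        # Clean environment: a brand-new in-memory database per test.
        self.conn = sqlite3.connect(":memory:")
        create_schema(self.conn)
        # Fixture: the state this group of tests requires.
        register_user(self.conn, "existing-user")

    def tearDown(self) -> None:
        self.conn.close()

    def test_registering_adds_one_user(self) -> None:
        register_user(self.conn, "new-user")
        self.assertEqual(count_users(self.conn), 2)

    def test_every_test_starts_from_the_fixture_state(self) -> None:
        # Passes regardless of test order, because nothing leaks between tests.
        self.assertEqual(count_users(self.conn), 1)
```

Because `setUp` rebuilds the database each time, the two tests can run in any order without affecting each other, which removes a common source of flakiness in larger tests.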

10. Test Code Quality

- Principles of maintainable test code

- Tests should be fast.

- Tests should be cohesive, independent, and isolated.

- Tests should have a reason to exist.

- Tests should be repeatable and not flaky.

- Tests should break if the behavior changes.

- Tests should have a single and clear reason to fail.

- Tests should be easy to write.

- Tests should be easy to read.

- Tests should be easy to change and evolve.

- Test smells

- Excessive duplication.

- Unclear assertions.

- Bad handling of complex or external resources.

- Fixtures that are too general.

- Sensitive assertions.